Layered Neural Rendering for Retiming People in Video

1 Google Research; 2 VGG, University of Oxford


| Original Video (Jump separately) | Our Retimed Result (Jump together!) |

Making all children jump into the pool together, in post-processing! In the original video (left), each child jumps into the pool at a different time. In our computationally retimed video (right), the jumps are aligned so that all the children jump into the pool together (notice that the child on the left remains unchanged between the input and output videos). In this paper, we present a method to produce this and other people-retiming effects in natural, ordinary videos.


| Background Layer | Layer 1 | Layer 2 | Layer 3 |

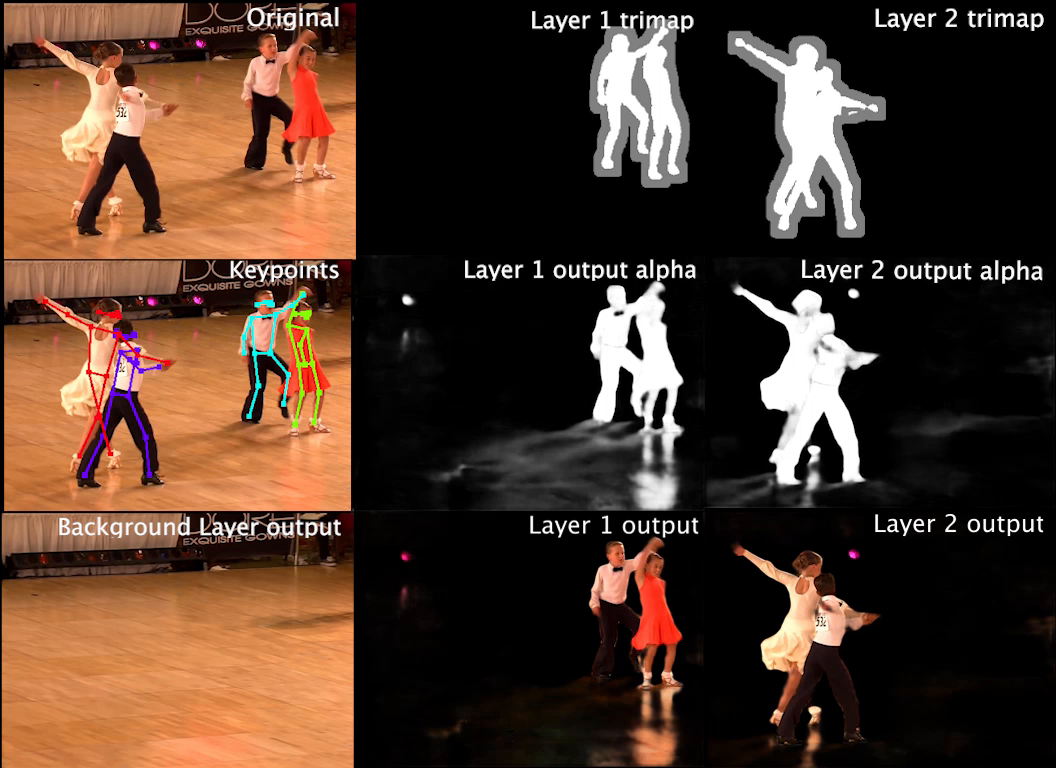

Decomposing a video into layers. Our method is based on a novel deep neural network that learns a layered decomposition of the input video. Our model not only disentangles the motions of people in different layers, but can also capture the various scene elements that are correlated with those people (e.g., water splashes as the children hit the water, shadows, reflections). When people are retimed, those related elements are automatically retimed with them, which allows us to create realistic and faithful re-renderings of the video for a variety of retiming effects.
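To make the idea of a layered frame concrete, here is a minimal sketch of how a set of RGBA layers could be recombined into a single image with the standard back-to-front "over" operator. This is an illustration under stated assumptions, not the paper's implementation: the function name `composite_over`, the array shapes, and the back-to-front layer ordering are all hypothetical choices made for this example.

```python
import numpy as np

def composite_over(background, layers):
    """Blend RGBA layers onto an RGB background, back to front.

    background: float array of shape (H, W, 3), values in [0, 1]
    layers:     list of float arrays of shape (H, W, 4) (RGB + alpha),
                ordered from the farthest layer to the nearest one
                (ordering is an assumption of this sketch)
    """
    out = background.astype(np.float64).copy()
    for layer in layers:
        rgb, alpha = layer[..., :3], layer[..., 3:4]
        # Standard "over" operator: the layer covers what is behind it
        # in proportion to its alpha.
        out = alpha * rgb + (1.0 - alpha) * out
    return out

# Tiny example: a half-transparent white layer over a black background.
background = np.zeros((2, 2, 3))
layer = np.ones((2, 2, 4))
layer[..., 3] = 0.5
frame = composite_over(background, [layer])  # every pixel becomes 0.5 grey
```

Because each layer carries its own alpha, elements associated with a person (a shadow or a splash, for instance) travel with that layer when it is blended back into the frame.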


Abstract

We present a method for retiming people in an ordinary, natural video: manipulating and editing the times at which different motions of individuals in the video occur. We can temporally align different motions, change the speed of certain actions (speeding up/slowing down, or entirely "freezing" people), or "erase" selected people from the video altogether. We achieve these effects computationally via a dedicated learning-based layered video representation, where each frame in the video is decomposed into separate RGBA layers, representing the appearance of different people in the video. A key property of our model is that it not only disentangles the direct motions of each person in the input video, but also correlates each person automatically with the scene changes they generate (e.g., shadows, reflections, and motion of loose clothing). The layers can be individually retimed and recombined into a new video, allowing us to achieve realistic, high-quality renderings of retiming effects for real-world videos depicting complex actions and involving multiple individuals, including dancing, trampoline jumping, or group running.
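Once a video is available as per-person layers, the retiming effects listed above can be thought of as choosing, for every output frame, which source frame each layer is read from. The sketch below illustrates that index remapping only. It is not the method's actual pipeline, and the function `retimed_indices` and its parameters `offset`, `speed`, and `freeze_at` are hypothetical names introduced for this example.

```python
import numpy as np

def retimed_indices(num_frames, offset=0, speed=1.0, freeze_at=None):
    """For each output frame, return the source frame index of one layer.

    offset:    delay the layer by this many frames (negative = earlier)
    speed:     playback rate (2.0 = twice as fast, 0.5 = half speed)
    freeze_at: if given, hold the layer at this source frame once reached
               (assumes speed >= 0 so indices never decrease)
    """
    t = np.arange(num_frames)
    src = np.rint((t - offset) * speed).astype(int)
    # Hold the first/last frame when the remapped time leaves the clip.
    src = np.clip(src, 0, num_frames - 1)
    if freeze_at is not None:
        src = np.minimum(src, freeze_at)
    return src

# A toy layer video of shape (T, H, W, 4) whose red channel stores the frame number.
T = 5
layer_video = np.zeros((T, 1, 1, 4))
layer_video[:, 0, 0, 0] = np.arange(T)

delayed = layer_video[retimed_indices(T, offset=2)]   # source frames 0, 0, 0, 1, 2
faster = layer_video[retimed_indices(T, speed=2.0)]   # source frames 0, 2, 4, 4, 4
frozen = layer_video[retimed_indices(T, freeze_at=1)] # source frames 0, 1, 1, 1, 1
```

In this picture, aligning several people's actions amounts to giving each layer its own `offset`, and "erasing" a person amounts to leaving that layer out when the layers are recombined.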

Paper

Layered Neural Rendering for Retiming People in Video

Supplementary Material

Code

[code]

Acknowledgements. The original "Ballroom" video belongs to Desert Classic Dance. This work was funded in part by the EPSRC Programme Grant Seebibyte EP/M013774/1.